Researchers put quantum electrodynamics to the test, pushing the boundaries of the Standard Model of physics.

Our understanding of charged particles’ interactions with each other and electromagnetic fields is based on quantum electrodynamics (QED), which is an integral part of the Standard Model — our best working physical theory to describe the basic building blocks of the universe and their interactions.

But is this theory accurate enough? To find out, a group of scientists measured the difference in the magnetic properties of two isotopes of neon confined in a magnetic trap and found complete agreement with the theoretical prediction. A newly developed experimental technique allowed the researchers to improve accuracy by approximately two orders of magnitude compared to previous measurements.

Using quantum electrodynamics, physicists can compute many particle properties with extraordinary precision. One of these quantities is the magnetic moment of the electron, characterized by a dimensionless number called the g-factor, which sets the strength of the particle’s interaction with a magnetic field.

The g-factor of an electron not bound to an atomic nucleus has been measured previously. The most precise result obtained by Hanneke, Fogwell and Gabrielse in 2008 had an accuracy of 13 digits, and was consistent with the computation performed at the same level of precision. This is one of the best matches of theory and experiment in the history of physics.

If an electron is bound to a nucleus, however, the interaction between the two affects the value of the g-factor. Quantum electrodynamics does allow one to compute this quantity, but comparing experiment with prediction is challenging in this case: the accuracy of the measured bound-electron g-factor is limited by uncertainties in the electron and nuclear masses as well as by unavoidable fluctuations in the magnetic field of the detector.
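For orientation, here is the standard textbook relation behind such measurements. It is not quoted in the article, and the symbols are introduced here as assumptions: $\omega_L$ is the electron's spin-precession (Larmor) frequency, $\omega_c$ the ion's cyclotron frequency in the same field $B$, $m_e$ the electron mass, $M$ and $q$ the ion's mass and charge, and $e$ the elementary charge.

$$
\omega_L = \frac{g\,e\,B}{2 m_e}, \qquad
\omega_c = \frac{q\,B}{M}
\quad\Longrightarrow\quad
g = 2\,\frac{\omega_L}{\omega_c}\,\frac{m_e}{M}\,\frac{q}{e}
$$

The field $B$ drops out of the ratio only if both frequencies are measured in exactly the same field, and the mass ratio $m_e/M$ enters directly. That is why mass uncertainties and field fluctuations limit the accuracy of an individual g-factor.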

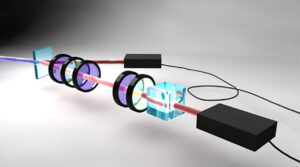

To overcome these difficulties, a team of physicists led by Sven Sturm at Max-Planck-Institut für Kernphysik in Heidelberg designed an experiment to measure the difference between the g-factors of electrons in two highly charged neon ions. The scientists studied the difference between these two values rather than the individual g-factors because extracting each individual value depends on particle masses and magnetic field strengths, which are hard to measure with sufficient accuracy. Validating the experimental result requires comparison with a theoretically determined value, and for the difference between the g-factors that value is relatively simple to calculate.

The researchers used neon ions with only one electron because their theoretical description is very similar to that of hydrogen, the simplest atom, allowing for more accurate computations to compare with experimental results.

“With our work, we have now succeeded in investigating these QED predictions with unprecedented resolution, and partially, for the first time,” said Sturm in an interview. “To do this, we looked at the difference in the g-factor for two isotopes of highly charged neon ions that possess only a single electron.”

The team used a Penning trap, where charged particles are confined using a homogeneous magnetic field and an inhomogeneous electric field. Two different neon ions, separated by about half a millimeter, move along the same circle-like trajectory in the detector. This small distance between them helps ensure that the magnetic field fluctuations experienced by both ions are almost the same, so the uncertainty in the g-factors caused by these uncontrolled fluctuations cancels out in the difference between the two g-factors.
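The cancellation of shared field fluctuations can be illustrated with a toy simulation. This is a minimal sketch, not the team's analysis: the g-factor values, the noise level, and the function name `simulate_difference_scatter` are all illustrative assumptions, and the field is expressed in arbitrary units where the precession frequency is simply g times B.

```python
import random
import statistics


def simulate_difference_scatter(n_shots=5000, rel_noise=1e-6, seed=1):
    """Scatter of the frequency difference for shared vs. independent field noise."""
    rng = random.Random(seed)
    g_a = 2.0      # illustrative g-factor of ion A (not a measured value)
    g_b = 2.0001   # illustrative g-factor of ion B (not a measured value)
    shared, independent = [], []
    for _ in range(n_shots):
        eps_common = rng.gauss(0.0, rel_noise)  # fluctuation seen by ion A
        eps_other = rng.gauss(0.0, rel_noise)   # unrelated fluctuation
        # Toy units: precession frequency = g * B, with B = 1 + fluctuation.
        # Case 1: both ions experience the same fluctuation.
        shared.append(g_b * (1 + eps_common) - g_a * (1 + eps_common))
        # Case 2: ion B experiences a different, independent fluctuation.
        independent.append(g_b * (1 + eps_other) - g_a * (1 + eps_common))
    return statistics.stdev(shared), statistics.stdev(independent)


shared_scatter, independent_scatter = simulate_difference_scatter()
print(f"shared field:      {shared_scatter:.2e}")
print(f"independent field: {independent_scatter:.2e}")
```

When both ions see the same fluctuation, the noise in the difference scales with the small g-factor difference rather than with the g-factors themselves, so the scatter shrinks by orders of magnitude in this toy model.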

To determine the g-factors of the electrons, the scientists measured the probability for each of them to flip the direction of its spin in response to an applied magnetic pulse. This probability depends on the g-factor and is different for the two ions. The final result provided the difference between the two electrons’ g-factors with a record accuracy of 13 digits, which is around two orders of magnitude better than the accuracy achieved in previous measurements.

The theoretical calculations to corroborate this result were carried out with similar accuracy and agreed with experiment, with the precision limited solely by the uncertainty in the nuclear radii. This once again confirms that quantum electrodynamics is very well suited for describing the interactions of charged subatomic particles and helps confirm the Standard Model of physics.

“In comparison with the new experimental values, we confirmed that the electron does indeed interact with the atomic nucleus via the exchange of photons, as predicted by QED,” explained Zoltán Harman in an interview.

The physicists stressed that their method is currently experimentally limited by magnetic field inhomogeneities but could be significantly improved in the future, possibly extending the precision to 15 digits.

The technique that the physicists developed could also help search for physics beyond the Standard Model, since unknown particles and their interactions could cause observational data to deviate from the results of quantum electrodynamical computations.

“In the future, the method presented here could allow for a number of novel and exciting experiments, such as the direct comparison of matter and antimatter or the ultra-precise determination of fundamental constants,” said Tim Sailer, first author of the study.

Reference: Tim Sailer, et al., Measurement of the bound-electron g-factor difference in coupled ions, Nature (2022). DOI: 10.1038/s41586-022-04807-w
